Abstract

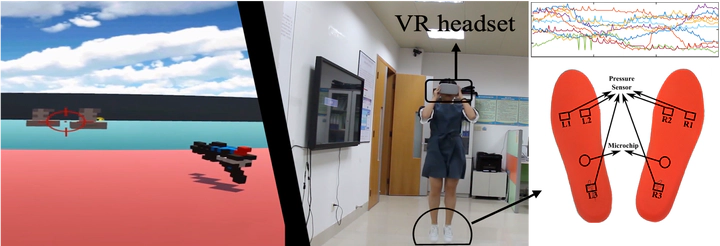

This work explores the use of foot gestures for locomotion in virtual environments. Foot gestures are represented as distributions of plantar pressure, detected by three sparsely located sensors on each insole. A Long Short-Term Memory (LSTM) model is chosen as the classifier to recognize the performer’s foot gesture from the captured pressure signals. The trained classifier takes the noisy, sparse sensor data directly as input and identifies seven categories of foot gestures (stand, walk forward, walk backward, run, jump, slide left, and slide right) without manually defined signal features. The classifier recognizes these gestures despite large sensor-specific, inter-person, and intra-person variations. Results show that an accuracy of ∼80% can be achieved across users with different shoe sizes and ∼85% for users with the same shoe size. A novel method, Dual-Check Till Consensus, is proposed to reduce the latency of gesture recognition from 2 seconds to 0.5 seconds and increase the accuracy to over 97%. This method offers a promising way to achieve lower latency and higher accuracy at a minor cost in computational workload. The high accuracy and fast classification of our method could lead to wider use of foot patterns for human-computer interaction.
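To make the data flow concrete, the following is a minimal sketch of how a six-channel pressure stream (three sensors on each of two insoles) might be cut into short windows and how a label could be accepted only once two consecutive windows agree. The abstract does not specify the Dual-Check Till Consensus procedure, so the agreement rule here is only an illustrative guess based on the method's name, not the authors' algorithm. The helper names `windows` and `first_consensus`, the window and step sizes, and the threshold-based `toy_classifier` standing in for the trained LSTM are all hypothetical.

```python
from typing import Callable, Iterator, List, Optional, Sequence

# Seven gesture categories named in the abstract.
GESTURES = ["stand", "walk_forward", "walk_backward", "run",
            "jump", "slide_left", "slide_right"]
N_CHANNELS = 6  # 3 sensors per insole x 2 insoles

Frame = Sequence[float]  # one pressure reading per channel


def windows(stream: Sequence, size: int, step: int) -> Iterator[Sequence]:
    """Yield successive windows of `size` frames, advancing by `step` frames."""
    for start in range(0, len(stream) - size + 1, step):
        yield stream[start:start + size]


def first_consensus(stream: Sequence[Frame],
                    classify: Callable[[Sequence[Frame]], str],
                    size: int, step: int) -> Optional[str]:
    """Return the first label on which two consecutive windows agree.

    Assumption: this agreement rule is a guess at what a "consensus"
    check could look like; it is not taken from the paper.
    """
    previous = None
    for window in windows(stream, size, step):
        label = classify(window)
        if label == previous:
            return label
        previous = label
    return None  # stream ended before two windows agreed


def toy_classifier(window: Sequence[Frame]) -> str:
    """Hypothetical stand-in for the trained LSTM: thresholds mean total pressure."""
    mean_total = sum(sum(frame) for frame in window) / len(window)
    return "jump" if mean_total > 3.0 else "stand"


# Five low-pressure frames followed by ten high-pressure frames.
stream: List[Frame] = [[0.0] * N_CHANNELS] * 5 + [[1.0] * N_CHANNELS] * 10
decision = first_consensus(stream, toy_classifier, size=5, step=5)
```

In this sketch the first window is labeled `stand`, the next two are labeled `jump`, and the decision is returned as soon as the second `jump` window confirms the first. A real system would replace `toy_classifier` with the trained sequence model and choose the window length from the sensor sampling rate, which the abstract does not state.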