Real-World Blind Super-Resolution via Feature Matching with Implicit High-Resolution Priors

Abstract

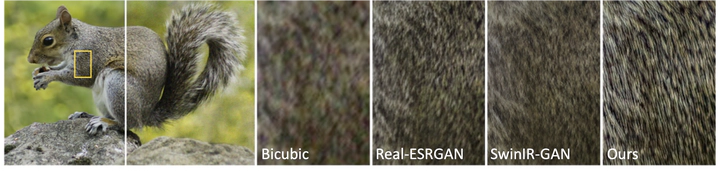

A key challenge in blind image super-resolution is recovering realistic textures for low-resolution images with unknown degradations. Most recent works rely entirely on the generative ability of GANs, which are difficult to train. Other methods resort to high-resolution reference images, which are usually unavailable. In this work, we propose a novel framework, denoted QuanTexSR, that restores realistic textures using Quantized Texture Priors encoded in a Vector Quantized GAN. Specifically, QuanTexSR formulates texture generation as a feature matching problem between textureless content features and a pretrained feature codebook: the content features are aligned to the quantized feature vectors in the codebook, and the final textures are generated from the matched quantized features. Since the codebook features have already shown the ability to generate natural textures during the pretraining stage, QuanTexSR can generate rich and realistic textures with the pretrained codebook as a texture prior. Moreover, we propose a semantic regularization technique that regularizes the pretraining of the codebook using clusters of features extracted from a pretrained VGG19 network, which further improves texture generation with semantic context. Experiments demonstrate that the proposed QuanTexSR generates textures that are competitive with or better than those of previous approaches. Code will be made publicly available.
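To make the feature matching idea concrete, the following is a minimal sketch of matching content features to a codebook by nearest neighbor. It assumes squared Euclidean distance, as is common in vector quantization; the abstract does not state the matching criterion, and the names `squared_distance` and `match_to_codebook` as well as the toy values are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_to_codebook(
    features: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[List[float]], List[int]]:
    """Replace each feature with its nearest codebook entry.

    Assumption: nearest neighbor under squared Euclidean distance.
    Returns the quantized features and the selected codebook indices.
    """
    quantized: List[List[float]] = []
    indices: List[int] = []
    for feature in features:
        # Pick the codebook entry closest to this feature vector.
        best = min(
            range(len(codebook)),
            key=lambda k: squared_distance(feature, codebook[k]),
        )
        indices.append(best)
        quantized.append(list(codebook[best]))
    return quantized, indices


# Toy example: three 2-D codebook entries and three "textureless" features.
codebook = [[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]]
features = [[0.2, -0.1], [0.9, 1.2], [3.5, 0.4]]
quantized, indices = match_to_codebook(features, codebook)
```

In this sketch, a decoder (not shown) would then generate textures from `quantized` rather than from the original features, so the output is constrained to entries the codebook learned during pretraining.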