Abstract

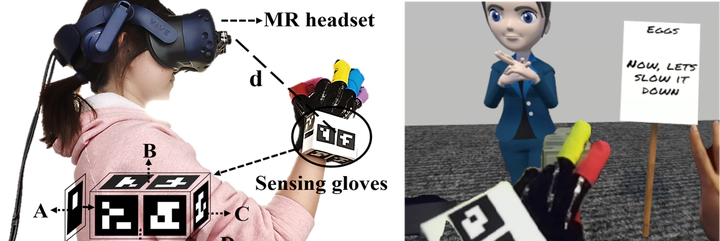

This paper presents a holistic system to scale up the teaching and learning of vocabulary words of American Sign Language (ASL). The system leverages the most recent mixed-reality technology to allow the user to perceive her own hands in an immersive learning environment with first- and third-person views for motion demonstration and practice. Precise motion sensing is used to record and evaluate motion, providing real-time feedback tailored to the specific learner. As part of this evaluation, learner motions are matched to features derived from the Hamburg Notation System (HNS) developed by sign-language linguists. We develop a prototype to evaluate the efficacy of mixed-reality-based interactive motion teaching. Results with 60 participants show a statistically significant improvement in learning ASL signs when using our system, in comparison to traditional desktop-based, non-interactive learning. We expect this approach to ultimately allow teaching and guided practice of thousands of signs.